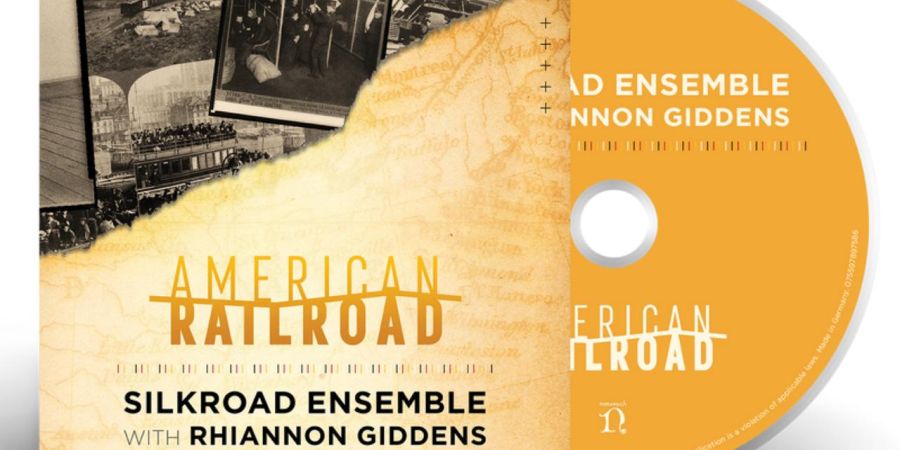

Rhiannon Giddens' 'American Railroad' Celebrates Immigrant Workers' Contributions

- 27/11/2024

